Welcome to part 3 of the Logical Data Warehouse with Serverless SQL Pools series. In this part we’ll be covering Incrementally loading Fact data when new sales data becomes available in the Data Lake. We’ll also be looking at a possible solution to implement Slowly Changing Dimensions to track changes over time when certain source system attributes are changed and we need to keep a history of these changes.

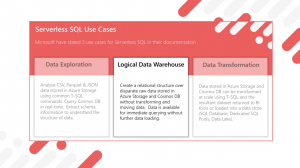

In Part 1 we introduced the data warehousing scenario, the source system data model, covered creating a new Serverless SQL Pools database, connections to the Azure Data Lake, and then creating Views over the source CSV stored in the Date Lake to query the data.

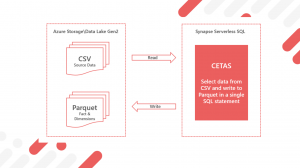

In Part 2 we covered querying the source Views data and using the CREATE EXTERNAL TABLE AS SELECT (CETAS) syntax to transform and load CSV data from the Data Lake into a Dimension and Fact structure (Star Schema) and save as Parquet file format.

- Creating a Logical Data Warehouse with Synapse Serverless SQL: Part 1 of 4 – Setting Up and Querying Source Data

- Creating a Logical Data Warehouse with Synapse Serverless SQL: Part 2 of 4 – Creating a Dimensional Model

- Creating a Logical Data Warehouse with Synapse Serverless SQL: Part 3 of 4 – Incremental Fact Loading and Slowly Changing Dimensions

- Creating a Logical Data Warehouse with Synapse Serverless SQL: Part 4 of 4 – Connecting Power BI to the Dimensional Model

Data and Code

All SQL code and data required for each part in this blog series is available on GitHub here. All SQL code for part 3 is available in the create_incremental_and_scd_objects.sql file.

New and Changed Data to Load

In our data warehousing scenario we have Sales Order data that is being written to the Data Lake in a YYYY-MM-DD dated folder structure, each days sales are saved to a separate folder. We now have new Sales Orders and Sales Order Lines CSV files to load for 2 days. There has also been an update to an existing Supplier to change their Supplier Category, this change we must track over time. We will reflect these changes to the Supplier as a Type 2 Slowly Changing Dimension in that we will create a new Supplier dimension row whilst still keeping the old value for historical purposes. There has also been a new Supplier added which must be added to the dimension.

New Sales Data and New and Changed Supplier data can be found on GitHub here.

- The Sales data needs placing in the following new folders. YYYY-MM-DD must be replaced with the date of the data.

- /sourcedatapartitionsalesorder/YYYY-MM-DD/

- /sourcedatapartitionsalesorderline/YYYY-MM-DD/

- The Supplier data needs placing in /sourcedatasystem/changeddata/2021-06-22/Purchasing_Suppliers/

Manual CETAS to load new Sales Order data

We’ll start by loading the 18th April CSV data by specifying a date folder named as YYYY-MM-DD in the LOCATION parameter in the CETAS statement and using the FilePathDate column in the source View to only select the data we need to load. The FilePathDate column in the View is the result of the filepath() function which can be used as a filter to only select/scan the requested folder. This reduces the amount of data processed as only the required folder and therefore data within the folder is scanned.

As with Part 2, the External Table only exists to load data to the Azure Data Lake folder and can be dropped after the load process has completed. We use Views to query the loaded Fact data.

CREATE EXTERNAL TABLE STG.FactSales

WITH

(

LOCATION = 'conformed/facts/factsales/incremental/2021-04-18',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = SynapseParquetFormat

)

AS

SELECT

--Surrogate Keys

DC.CustomerKey,

CAST(FORMAT(SO.OrderDate,'yyyyMMdd') AS INT) as OrderDateKey,

DSI.StockItemKey,

DS.SupplierKey,

--Degenerate Dimensions

CAST(SO.OrderID AS INT) AS OrderID,

CAST(SOL.OrderLineID AS INT) AS OrderLineID,

--Measure

CAST(SOL.Quantity AS INT) AS SalesOrderQuantity,

CAST(SOL.UnitPrice AS DECIMAL(18,2)) AS SalesOrderUnitPrice

FROM LDW.vwSalesOrdersLines SOL

INNER JOIN LDW.vwSalesOrders SO ON SOL.OrderID = SO.OrderID

LEFT JOIN LDW.vwDimCustomer DC ON DC.CustomerID = SO.CustomerID

LEFT JOIN LDW.vwDimStockItem DSI ON DSI.StockItemID = SOL.StockItemID

LEFT JOIN LDW.vwStockItems SI ON SI.StockItemID = DSI.StockItemID

LEFT JOIN LDW.vwDimSupplier DS ON DS.SupplierID = SI.SupplierID

WHERE SOL.FilePathDate = '2021-04-18' AND SO.FilePathDate = '2021-04-18';Dynamic SQL with a Stored Procedure to load Sales Data

Although the SQL code above will load new data, we cannot parameterise the LOCATION value to change the folder where data is stored. To transform the above code into a more flexible process, we can use dynamic SQL to create the LOCATION parameter and also select the source data using a single date input parameter. The SQL code below will create a Stored Procedure which takes a single Date parameter as input, selects source data based on this date parameter, then writes the data out to a destination folder using the same date parameter.

CREATE PROCEDURE STG.FactSalesLoad @ProcessDate DATE

WITH ENCRYPTION

AS

BEGIN

DECLARE @location varchar(100)

IF OBJECT_ID('STG.FactSales') IS NOT NULL

DROP EXTERNAL TABLE STG.FactSales

SET @location = CONCAT('conformed/facts/factsales/incremental/',FORMAT (@ProcessDate, 'yyyy/MM/dd') )

DECLARE @CreateExternalTableString NVARCHAR(2000)

SET @CreateExternalTableString =

'CREATE EXTERNAL TABLE STG.FactSales

WITH

(

LOCATION = ''' + @location + ''',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = SynapseParquetFormat

)

AS

SELECT

--Surrogate Keys

DC.CustomerKey,

CAST(FORMAT(SO.OrderDate,''yyyyMMdd'') AS INT) as OrderDateKey,

DSI.StockItemKey,

DS.SupplierKey,

--Degenerate Dimensions

CAST(SO.OrderID AS INT) AS OrderID,

CAST(SOL.OrderLineID AS INT) AS OrderLineID,

--Measure

CAST(SOL.Quantity AS INT) AS SalesOrderQuantity,

CAST(SOL.UnitPrice AS DECIMAL(18,2)) AS SalesOrderUnitPrice

FROM LDW.vwSalesOrdersLines SOL

INNER JOIN LDW.vwSalesOrders SO ON SOL.OrderID = SO.OrderID

LEFT JOIN LDW.vwDimCustomer DC ON DC.CustomerID = SO.CustomerID

LEFT JOIN LDW.vwDimStockItem DSI ON DSI.StockItemID = SOL.StockItemID

LEFT JOIN LDW.vwStockItems SI ON SI.StockItemID = DSI.StockItemID

LEFT JOIN LDW.vwDimSupplier DS ON DS.SupplierID = SI.SupplierID

WHERE SOL.FilePathDate = ''' + CAST(@ProcessDate AS CHAR(10)) + ''' AND SO.FilePathDate = ''' + CAST(@ProcessDate AS CHAR(10)) + ''''

EXEC sp_executesql @CreateExternalTableString

END;Now we can run the Proc which will load the source CSV data into the required destination date folder.

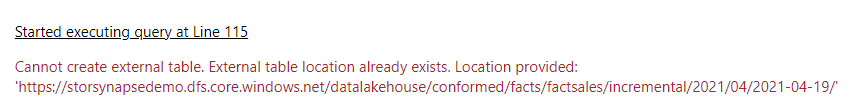

EXEC STG.FactSalesLoad '2021-04-19';Before we continue, let’s run the Stored Procedure again with the same date parameter…

EXEC STG.FactSalesLoad '2021-04-19';We’ll get an error stating that the “location already exists” even though we have dropped the External Table in the Stored Procedure. The current DROP EXTERNAL TABLE command will drop the table from the database but the underlying folder and files in the Data Lake will not be deleted.

Slowly Changing Dimensions

When we look at loading changed data for dimensions that must be tracked over time, we have to be aware that Serverless SQL Pools currently does not support updating data in the Data Lake, it is an append-only process in that files can be added to the underlying storage but we cannot run SQL to change existing data. However, we can load new and changed dimension data into new destination folders under the root dimension folder.

Select and Load the Supplier Data Changes

The SQL code below will create a View which targets selecting changed data for Suppliers.

CREATE VIEW LDW.vwIncrementalSuppliers

AS

SELECT fct.*,

fct.filepath(1) AS FilePathDate

FROM

OPENROWSET

(

BULK 'sourcedatasystem/ChangedData/*/Purchasing_Suppliers/*.csv',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|'

) AS fctThe SQL code below will now write the new and changed data out to a sub-folder /02 in the current Supplier dimension folder. This can be amended to use dynamic SQL as seen in the Sales Order process. We first select the maximum surrogate key from the current dimension data and use this to continue the sequence when writing the changed and new data. Within the CSV file is a date column which indicates when the source data changed, we can use this as our ValidFrom value.

DECLARE @MaxKey TINYINT

SELECT @MaxKey = MAX(SupplierKey) FROM LDW.vwDimSupplier

IF OBJECT_ID('STG.DimSupplier') IS NOT NULL

DROP EXTERNAL TABLE STG.DimSupplier;

CREATE EXTERNAL TABLE STG.DimSupplier

WITH

(

LOCATION = 'conformed/dimensions/dimsupplier/02',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = SynapseParquetFormat

)

AS

SELECT CAST(ROW_NUMBER() OVER(ORDER BY S.SupplierID) AS TINYINT) + @MaxKey AS SupplierKey,

S.SupplierID,

S.SupplierName,

SC.SupplierCategoryName,

CAST(S.ValidFrom AS DATE) AS ValidFromDate

FROM LDW.vwIncrementalSuppliers S

LEFT JOIN LDW.vwSupplierCategories SC ON SC.SupplierCategoryID = S.SupplierCategoryID

WHERE S.FilePathDate = '2021-06-22'

ORDER BY S.SupplierID;Selecting data from the Supplier Dimension

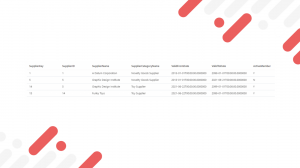

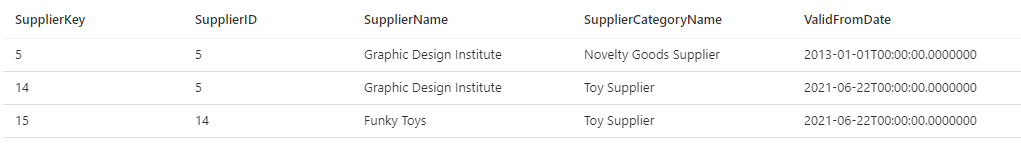

If we now query the existing View to select data from the Supplier dimension, we get all the existing data, the changed data, and the new data. However, we are missing vital columns which are required to flag the date range validity of a dimension row.

SELECT *

FROM LDW.vwDimSupplier

WHERE SupplierID IN (5,14)

ORDER BY SupplierID;

In the image above we see the result which shows that SupplierID 5 has changed Supplier Category.

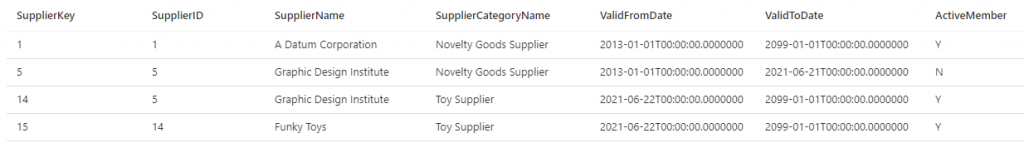

Create View to construct a complete SCD Type 2 Dimension

We can use the single ValidFrom date to calculate the ValidTo and also calculate the ActiveMember flag for each dimension row. We use the LEAD function and partition by the SupplierID (source system business key) to generate contiguous date ranges. Please note that we can also use datetime values and change the DATEADD accordingly.

CREATE VIEW LDW.vwDimSupplierSCD

AS

SELECT SupplierKey,

SupplierID,

SupplierName,

SupplierCategoryName,

ValidFromDate,

ISNULL(DATEADD(DAY,-1,LEAD(ValidFromDate,1) OVER (PARTITION BY SupplierID ORDER BY SupplierKey)),'2099-01-01') AS ValidToDate,

CASE ROW_NUMBER() OVER(PARTITION BY SupplierID ORDER BY SupplierKey DESC) WHEN 1 THEN 'Y' ELSE 'N' END AS ActiveMember

FROM LDW.vwDimSupplierNow when we select from the new Dimension View we are able to see date ranges and which row is the current active member.

SELECT *

FROM LDW.vwDimSupplierSCD

WHERE SupplierID IN (1,5,14)

ORDER BY SupplierID,SupplierKey

Amend Fact Loading Stored Procedure

We can now use the SCD version of the Supplier dimension view LDW.vwDimSupplierSCD and use the Sales Order date to select the correct dimension value at the time the Sales Order was created. We have added an additional JOIN to the new dimension view using the Sales Order date and the Supplier ValidFrom and ValidTo dates.

CREATE PROCEDURE STG.FactSalesLoadCSD @ProcessDate DATE

WITH ENCRYPTION

AS

BEGIN

DECLARE @location varchar(100)

IF OBJECT_ID('STG.FactSales') IS NOT NULL

DROP EXTERNAL TABLE STG.FactSales

SET @location = CONCAT('conformed/facts/factsales/incremental/',FORMAT (@ProcessDate, 'yyyy/MM/dd') )

DECLARE @CreateExternalTableString NVARCHAR(2000)

SET @CreateExternalTableString =

'CREATE EXTERNAL TABLE STG.FactSales

WITH

(

LOCATION = ''' + @location + ''',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = SynapseParquetFormat

)

AS

SELECT

--Surrogate Keys

DC.CustomerKey,

CAST(FORMAT(SO.OrderDate,''yyyyMMdd'') AS INT) as OrderDateKey,

DSI.StockItemKey,

DS.SupplierKey,

--Degenerate Dimensions

CAST(SO.OrderID AS INT) AS OrderID,

CAST(SOL.OrderLineID AS INT) AS OrderLineID,

--Measure

CAST(SOL.Quantity AS INT) AS SalesOrderQuantity,

CAST(SOL.UnitPrice AS DECIMAL(18,2)) AS SalesOrderUnitPrice

FROM LDW.vwSalesOrdersLines SOL

INNER JOIN LDW.vwSalesOrders SO ON SOL.OrderID = SO.OrderID

LEFT JOIN LDW.vwDimCustomer DC ON DC.CustomerID = SO.CustomerID

LEFT JOIN LDW.vwDimStockItem DSI ON DSI.StockItemID = SOL.StockItemID

LEFT JOIN LDW.vwStockItems SI ON SI.StockItemID = DSI.StockItemID

LEFT JOIN LDW.vwDimSupplierSCD DS ON DS.SupplierID = SI.SupplierID AND SO.OrderDate BETWEEN DS.ValidFromDate AND DS.ValidToDate

WHERE SOL.FilePathDate = ''' + CAST(@ProcessDate AS CHAR(10)) + ''' AND SO.FilePathDate = ''' + CAST(@ProcessDate AS CHAR(10)) + ''''

EXEC sp_executesql @CreateExternalTableString

ENDConclusion

During parts 1, 2, and 3 we have worked through the process of creating a new Serverless SQL Pools database and creating Views to query source CSV data in an Azure Data Lake Gen2 account. We then looked at the process of writing data back to the Data Lake using CREATE EXTERNAL TABLE AS SELECT syntax to transform and save the source data as Parquet file format and as denormalised (flattened) dimension data. In the final part we looked at how to incrementally load Fact data and also how to track changes to dimension data over time.

Further Thoughts

In Part 2 we loaded all the Sales Order data for all the available dates into a single /initial/ folder. However, in the real-world our source data may be large and therefore we would want to load data into YYYY-MM-DD at the beginning of the process to partition the data. The incremental loading Stored Procedure can be used for this process with a loop in place to load each day of data.

Many thanks for reading this blog and if you have any questions please reach out on Twitter.

5 Responses

[…] Welcome to Part 4 in the Creating a Logical Data Warehouse with Synapse Severless SQL Pools series. We are now looking at connecting Power BI to Serverless SQL Pools to use the dimensional model we have created for reporting and analytics purposes. We can now create data visualisations in Power BI to analyse the Parquet file data stored in the Azure Data Lake storage account. The process to read source CSV data and transform into Parquet using Serverless SQL Pools has been discussed in Parts 1, 2, and 3. […]

[…] The process to read source CSV data and transform into Parquet using Serverless SQL Pools has been discussed in Parts 1, 2, and 3. […]

Dear Andy,

Thanks very much for this wonderful article. I just wanted to ask one thing about this process automation. How we could automate these queries for creating external tables and views on serverless data warehouse through Data Factory or Other Methods. We need to refresh the fact, dimension, and summary tables daily.

Thanks

HI Andy,

How the files in ADLS get deleted? External table will through error when we rerun. How this is managed?

Brilliant Mr. Andy. This blog is gold